Configuration Values to Set for INSERT, UPDATE, DELETE

In addition to the new parameters listed above, some existing parameters need to be set to support INSERT, UPDATE, and DELETE: one must be set to true (default is false; not required as of Hive 2.0), and a mode setting must be nonstrict (default is strict).

Configuration Values to Set for Compaction

If the data in your system is not owned by the Hive user (i.e., the user that the Hive metastore runs as), then Hive will need permission to run as the user who owns the data in order to perform compactions. If you have already set up HiveServer2 to impersonate users, then the only additional work is to ensure that Hive has the right to impersonate users from the host running the Hive metastore. This is done by adding that host's name to the Hive user's proxy-user hosts setting in Hadoop's core-site.xml file. If you have not already done this, then you will need to configure Hive to act as a proxy user.
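Once the compactor is configured, a compaction can also be requested by hand and its progress checked. The HiveQL below is a minimal sketch, assuming the bucketed, transactional table testTableNew from the walkthrough in this post and a metastore with compaction enabled. The request is only queued; the metastore's compactor does the actual work in the background.

```sql
-- Queue a major compaction: the base file and delta files of each bucket
-- are rewritten into a new base file. Use 'minor' to merge only the deltas.
ALTER TABLE testTableNew COMPACT 'major';

-- List queued, running and finished compactions with their state.
SHOW COMPACTIONS;

-- List open and aborted transactions known to the metastore.
SHOW TRANSACTIONS;
```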

COMMANDS HIVE DRAWIT UPDATE

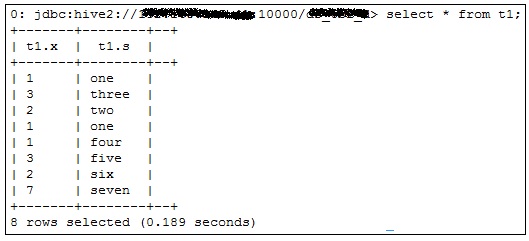

Once you have installed and configured Hive, create a simple table:

`hive> create table testTable(id int, name string) row format delimited fields terminated by ',';`

Then try to insert a few rows into the test table:

`hive> insert into table testTable values (1,'row1'),(2,'row2');`

Now try to delete the records you just inserted. By default, transactions are configured to be off, so the delete fails:

`hive> delete from testTable where id = 1;`

`FAILED: SemanticException: Attempt to do update or delete using transaction manager that does not support these operations.`

In other words, update and delete are not supported by the transaction manager currently in use. To support them, you must change Hive's transaction configuration. Restart the service and then try the delete command again:

`FAILED: LockException: Error communicating with the metastore.`

In order to use insert/update/delete operations, you also need to change further configuration in conf/hive-site.xml, as the feature is still under development. Restart the service and run the delete command again:

`hive> delete from testTable where id = 1;`

`FAILED: SemanticException: Attempt to do update or delete on table default.testTable that does not use an AcidOutputFormat or is not bucketed.`

Only the ORC file format is supported in this first release. The feature has been built so that transactions can be used by any storage format that can determine how updates or deletes apply to base records (basically, one that has an explicit or implicit row id), but so far the integration work has only been done for ORC. Tables must also be bucketed to use these features; tables in the same system that do not use transactions and ACID do not need to be bucketed.

The example below builds a table with the ORC file format, bucketing enabled, and ('transactional'='true'):

`hive> create table testTableNew(id int, name string) clustered by (id) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true');`

Insert: `hive> insert into table testTableNew values (1,'row1'),(2,'row2'),(3,'row3');`

Update: `hive> update testTableNew set name = 'updateRow2' where id = 2;`

Delete: `hive> delete from testTableNew where id = 1;`
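If the original, non-transactional testTable already holds data, one way to carry it over is to copy the rows into the ACID table instead of trying to convert the text-format table in place. The HiveQL below is a minimal sketch, assuming testTableNew has just been created as shown above and is still empty (otherwise the copied ids would duplicate existing rows), and that the transaction settings from this walkthrough are in effect.

```sql
-- Inspect the original table. Among other details, the output lists its
-- InputFormat/OutputFormat (text rather than ORC), its bucket count and its
-- table parameters (no 'transactional'='true'), which is why DELETE failed.
DESCRIBE FORMATTED testTable;

-- Copy the existing rows into the bucketed, transactional ORC table.
INSERT INTO TABLE testTableNew SELECT id, name FROM testTable;

-- Check the copy; row-level UPDATE and DELETE should now target testTableNew.
SELECT * FROM testTableNew ORDER BY id;
```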

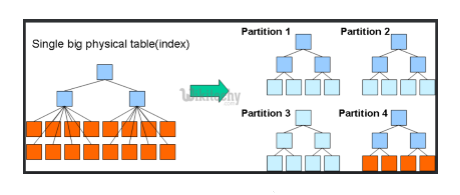

You should not think about Hive as a regular RDBMS; Hive is better suited for batch processing over very large sets of immutable data. The following applies to versions prior to Hive 0.14 (see the answer by ashtonium for later versions).

There is no operation supported for deleting or updating a particular record or a particular set of records, and to me this is more a sign of a poor schema. Here is what you can find in the official documentation:

Hadoop is a batch processing system and Hadoop jobs tend to have high latency and incur substantial overheads in job submission and scheduling. As a result, latency for Hive queries is generally very high (minutes) even when the data sets involved are very small (say a few hundred megabytes). It therefore cannot be compared with systems such as Oracle, where analyses are conducted on a significantly smaller amount of data but proceed much more iteratively, with response times between iterations of less than a few minutes. Hive aims to provide acceptable (but not optimal) latency for interactive data browsing, queries over small data sets, or test queries. Hive is not designed for online transaction processing and does not offer row-level updates; it is best used for batch jobs over large sets of immutable data (like web logs).

One way to work around this limitation is to use partitions. I don't know what your id corresponds to, but if you receive different batches of ids separately, you could redesign your table so that it is partitioned by id, and then you would be able to easily drop the partitions for the ids you want to get rid of.
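The partition workaround can be sketched in HiveQL as follows. The table records, its name column and the staging table staging_records (with columns id and name) are hypothetical. The sketch loads data with INSERT OVERWRITE ... SELECT because INSERT ... VALUES only arrived in Hive 0.14.

```sql
-- Hypothetical table: the partition column id is declared only in
-- PARTITIONED BY, not in the regular column list.
CREATE TABLE records (name STRING)
PARTITIONED BY (id INT);

-- Load one batch into its own partition from a hypothetical staging table.
INSERT OVERWRITE TABLE records PARTITION (id = 1)
SELECT name FROM staging_records WHERE id = 1;

-- "Delete" every row with id = 1 by dropping its whole partition.
ALTER TABLE records DROP IF EXISTS PARTITION (id = 1);

-- Confirm which partitions remain.
SHOW PARTITIONS records;
```

This only pays off when ids arrive in reasonably large batches: one partition per individual id produces many small partitions and files, which puts load on the metastore and HDFS.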
